Melanoma is the most serious type of skin cancer because it can spread rapidly, making early detection crucial. If a person has many naturally occurring moles, a doctor may suggest total-body photography to track the moles' growth over time.

Johns Hopkins researchers are exploring the potential of computer vision to track skin lesions across multiple scans from different patient visits, a novel approach they say promises to identify lesions that the human eye can miss.

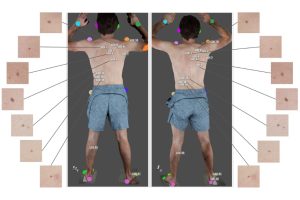

In their new work, the researchers use geometry and texture analysis to track skin abnormalities via a 3D textured mesh. The team includes researchers from the Department of Computer Science, the School of Medicine’s Department of Dermatology, and the Department of Orthopaedic Surgery at the Johns Hopkins Hospital, as well as collaborators from Lumo Imaging and the Eunice Kennedy Shriver National Institute of Child Health and Human Development. According to the team, the framework can find corresponding skin lesions regardless of changes in body pose and camera view, which is critical because a patient cannot be guaranteed to stand in exactly the same position for every body scan.

The researchers’ method begins by identifying key points on the body to estimate the location of a skin lesion that was identified on an earlier scan. They then use details such as size, shape, and texture to more precisely locate the lesion on the new scan.
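The landmark step can be illustrated with a minimal sketch. It is not the researchers' implementation: the function name `estimate_location`, the toy coordinates, and the use of straight-line distances instead of distances measured on a 3D mesh are all assumptions made for illustration. The idea is only that a lesion's distances to known landmarks on the earlier scan narrow down where to look on the new scan.

```python
import math

def estimate_location(lesion_src, landmarks_src, landmarks_tgt, candidates_tgt):
    """Return the target candidate whose landmark distances best match the source lesion's.

    Assumption: straight-line (Euclidean) distances stand in for whatever
    distance a real framework would measure on the body surface.
    """
    # Distances from the known lesion to each landmark on the earlier scan.
    ref = [math.dist(lesion_src, lm) for lm in landmarks_src]

    def mismatch(point):
        # Sum of squared differences between this candidate's landmark
        # distances and the lesion's landmark distances.
        return sum((math.dist(point, lm) - d) ** 2
                   for lm, d in zip(landmarks_tgt, ref))

    return min(candidates_tgt, key=mismatch)


# Hypothetical toy data: the new scan is the old one shifted by (5, 5, 0).
landmarks_old = [(0, 0, 0), (10, 0, 0), (0, 10, 0)]
landmarks_new = [(5, 5, 0), (15, 5, 0), (5, 15, 0)]
grid = [(x, y, 0) for x in range(21) for y in range(21)]

estimate = estimate_location((3, 4, 0), landmarks_old, landmarks_new, grid)
print(estimate)
```

In this toy case the estimate lands on the shifted position of the lesion, (8, 9, 0). On real scans the estimate would only be approximate, which is why a second step based on size, shape, and texture is needed.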

“Imagine that we want to look for a house in New York City. If we’re given the locations of some landmarks, like the Empire State Building, and how far the house is from those landmarks, we know the approximate region the house is located in,” explains Wei-Lun Huang, a doctoral candidate in the Department of Computer Science and a member of the Laboratory for Computational Sensing and Robotics’ Biomechanical and Image-Guided Surgical Systems Lab. He is advised by senior co-authors Mehran Armand—a professor of orthopaedic surgery with joint appointments in Mechanical Engineering and Computer Science—and Misha Kazhdan, a professor of computer science.

“The texture information we utilize is similar to using a picture of the house from Google Street View to narrow down our search,” Huang continues. “But what if the initial region doesn’t include the house we want, or what if there are multiple similar houses nearby?”

To make sure they’ve found the correct lesion on the new total-body scan, the researchers compare it with the lesion they’re looking for in terms of texture similarity, landmark alignment, and uniqueness within the region it’s located in.
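One way to picture how these three criteria might be combined is the sketch below. Everything in it is an assumption for illustration: the article does not say how the criteria are computed or weighted, so the cosine similarity on descriptor vectors, the exponential alignment term, the equal weights, and the name `rank_candidates` are hypothetical choices, not the researchers' formulas.

```python
import math

def cosine(a, b):
    """Cosine similarity between two descriptor vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

def rank_candidates(query_desc, candidates, w_texture=0.5, w_align=0.5):
    """Score candidates and report how clearly the best one stands out.

    candidates: list of (name, texture_descriptor, landmark_mismatch) tuples,
    where landmark_mismatch is 0.0 for perfect agreement with the landmarks.
    Returns (best_name, best_score, uniqueness).
    """
    scored = []
    for name, desc, mismatch in candidates:
        texture = cosine(query_desc, desc)   # texture similarity
        alignment = math.exp(-mismatch)      # 1.0 when landmark distances agree
        scored.append((w_texture * texture + w_align * alignment, name))
    scored.sort(reverse=True)
    best_score, best_name = scored[0]
    # Uniqueness: margin between the best candidate and the runner-up.
    runner_up = scored[1][0] if len(scored) > 1 else 0.0
    return best_name, best_score, best_score - runner_up


# Hypothetical candidates in the search region of the new scan.
region = [
    ("a", [1.0, 0.0, 0.0], 0.0),  # same texture, well aligned
    ("b", [0.0, 1.0, 0.0], 0.0),  # different texture, well aligned
    ("c", [1.0, 0.0, 0.0], 5.0),  # same texture, poorly aligned
]
best_name, best_score, uniqueness = rank_candidates([1.0, 0.0, 0.0], region)
print(best_name, best_score, uniqueness)
```

A small uniqueness margin corresponds to the "multiple similar houses nearby" case in Huang's analogy: the top candidate looks right, but not clearly more so than its neighbors.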

“If the lesion correspondence is found with confidence, we add it as a new landmark to help find the correspondence of the remaining lesions of interest,” says Huang.
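The iterative idea Huang describes, where a confidently matched lesion becomes a landmark for the remaining ones, could look roughly like the following sketch. It is a simplified illustration under stated assumptions: confidence is reduced to a single hypothetical threshold `tol` on landmark-distance mismatch, distances are straight-line rather than measured on a mesh, and the function name `propagate` is invented here.

```python
import math

def propagate(landmarks, lesions_src, candidates_tgt, tol=1.0):
    """Match lesions one by one, promoting confident matches to landmarks.

    landmarks: list of (source_point, target_point) pairs.
    lesions_src: dict mapping lesion name -> position on the earlier scan.
    candidates_tgt: list of candidate positions on the new scan.
    tol: hypothetical confidence threshold on the squared-distance mismatch.
    """
    landmarks = list(landmarks)
    pending = dict(lesions_src)
    matches = {}
    progress = True
    while pending and progress:
        progress = False
        for name, src in list(pending.items()):
            # Distances from this lesion to every current landmark (earlier scan).
            ref = [math.dist(src, s) for s, _ in landmarks]

            def mismatch(point):
                return sum((math.dist(point, t) - d) ** 2
                           for (_, t), d in zip(landmarks, ref))

            best = min(candidates_tgt, key=mismatch)
            if mismatch(best) <= tol:
                matches[name] = best
                landmarks.append((src, best))  # confident match becomes a landmark
                del pending[name]
                progress = True
    return matches


# Hypothetical toy data: the new scan is the old one shifted by (5, 5).
known = [((0, 0), (5, 5)), ((10, 0), (15, 5)), ((0, 10), (5, 15))]
found = propagate(known, {"a": (3, 4), "b": (6, 2)}, [(8, 9), (11, 7), (0, 0)])
print(found)
```

The loop stops when every lesion is matched or when a full pass adds no new landmark. For brevity the sketch does not prevent two lesions from claiming the same candidate, which a fuller version would need to handle.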

The researchers evaluated their framework on private and public datasets, where it achieved success rates comparable to those of a state-of-the-art method.

Their next steps involve addressing limitations that naturally arise from human data. For example, their localization method currently struggles with the sudden addition of new tattoos, low-quality scans, dramatic changes in pose, and significant weight change in patients.

“Our ultimate goal is automatic full-body lesion detection to allow for automated longitudinal skin lesion tracking,” says Huang. “This will allow our method to suggest that physicians pay particular attention to a lesion that has evolved abnormally.”

Their research was presented at the 26th International Conference on Medical Image Computing and Computer Assisted Intervention held October 8–12 in Vancouver, Canada.
