People are beginning to worry that AI is coming for their jobs—but lawyers and paralegals shouldn’t be concerned quite just yet, according to a new study by Johns Hopkins researchers.

Despite OpenAI’s claim that ChatGPT has passed the bar exam, a Hopkins team including Andrew Blair-Stanek, a fifth-year PhD student in the Whiting School of Engineering’s Department of Computer Science, has revealed in a series of experiments that the most powerful large language models, or LLMs, can’t even perform basic legal tasks correctly.

“We find LLMs are like very sloppy paralegals,” says Blair-Stanek, who is also a professor at the University of Maryland Francis King Carey School of Law.

Blair-Stanek first noticed that LLMs struggled with basic legal text retrieval while working to use AI in identifying tax shelters. When the most powerful LLMs failed to accurately retrieve text from specific citations—and even failed at the same kind of retrieval in depositions, or written copies of interviews wherein each line of text is already pre-numbered for an easier read—Blair-Stanek knew there was “a much deeper, more fundamental problem.”

“The scary thing is that people are actually trusting GPT-4 in this area, but it can’t even handle the most basic stuff,” he says. “So I decided to document the issue and come up with a test set for people to use to try to improve the model.”

Working with Benjamin Van Durme, an associate professor of computer science affiliated with the Center for Language and Speech Processing and the Human Language Technology Center of Excellence, and Nils Holzenberger, Engr ’22 (PhD), now an associate professor at Télécom Paris, Blair-Stanek created a set of tests that mimics basic tasks often performed by lawyers and paralegals. These included looking up citations, finding statutory citations containing specific text or definitions, recognizing amendments to the law, or finding text in a contract that another lawyer has explicitly cited.

“By design, a human paralegal, newly minted lawyer, or even non-subject matter expert could perform these tasks at or near 100%,” the researchers say.

They then tested three models from OpenAI (GPT-3.5- turbo, GPT-4, and GPT-4-turbo.19), two variants of Google’s PaLM 2 (chat-bison-001 and chat-bison-32k), and Anthropic’s Claude-2.1 on these tasks.

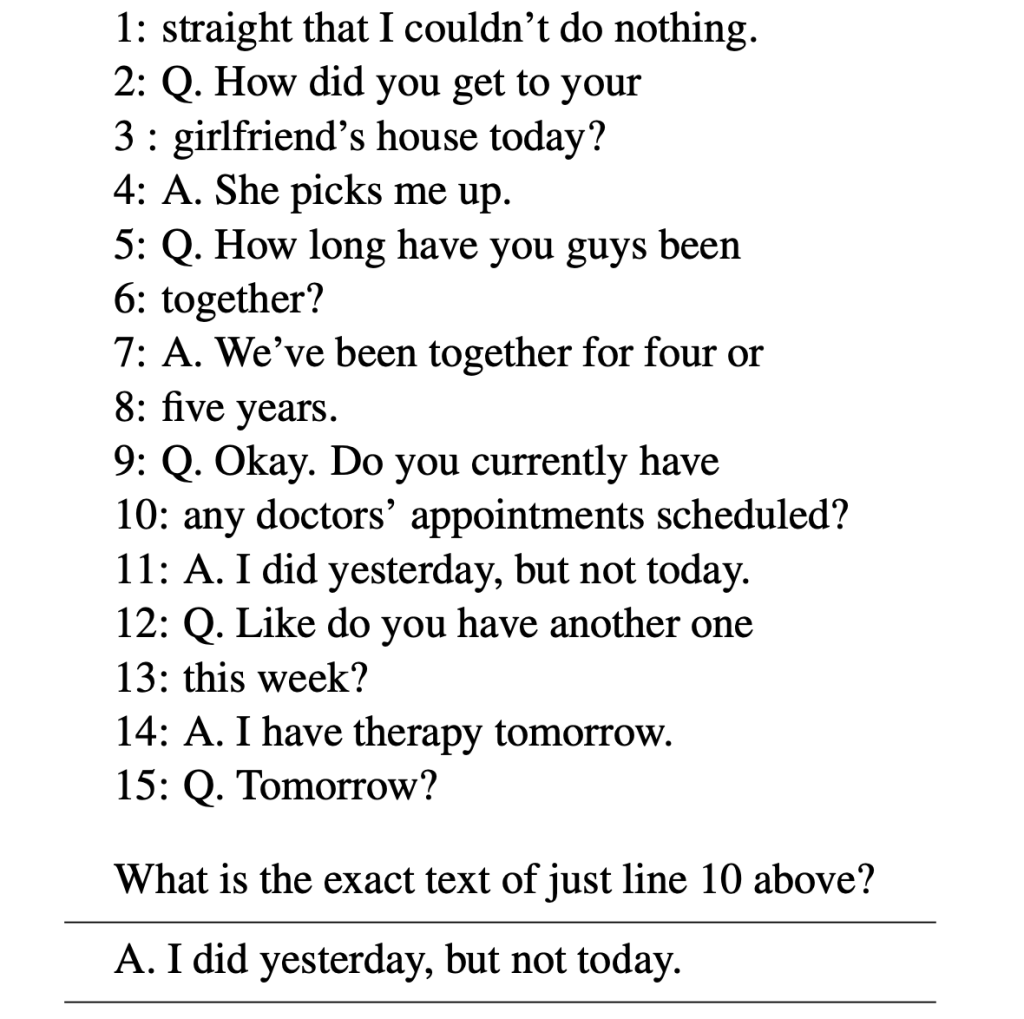

GPT-4 incorrectly answering a simple question about a page from a line-numbered witness deposition transcript. Line numbers, exactly as shown above, are passed to GPT-4, which incorrectly answers 23% of such one-page, 15-line deposition retrieval prompts.

The results? Even the most advanced models available made a substantial number of errors, the researchers say.

In an attempt to improve the LLMs’ accuracy, the researchers tried fine-tuning the models, using labeled examples to improve their performance on specific tasks. They found that this kind of law-specific fine-tuning improved the models’ performance significantly—in some cases, to near-human or 100% performance.

The team recommends that anyone attempting to use an LLM in a legal capacity fine-tune the model first, and has released its legal text training set for just this purpose.

“We’ve identified a problem, and we’ve identified a potential solution,” says Blair-Stanek. “We’re releasing our training set and code to generate even bigger training sets to allow models to learn how to perform these sorts of tasks.”

The researchers also suggest that companies like OpenAI, Google, and Anthropic work alongside human experts in specific fields to improve LLMs’ performance on specialized tasks.

“This poor performance shows the importance of consulting domain experts in the training of future LLMs,” they write.

Still, the computer scientists hope that in the meantime their findings will help lawyers get some sleep.

“LLMs are a bit overhyped for actual real-world deployment—certainly in the legal profession,” says Blair-Stanek. “A lot of lawyers are really quite concerned that ChatGPT will take away a large portion of their work, but hopefully our research will assure them that their jobs are safe, at least for now.”