Context-Aware Surgical Assistance for Virtual Mentoring

People - Dr. Gregory Hager, Balazs Vagvolgyi (ERC CISST)

Description

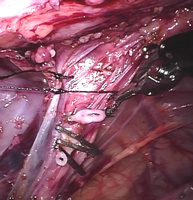

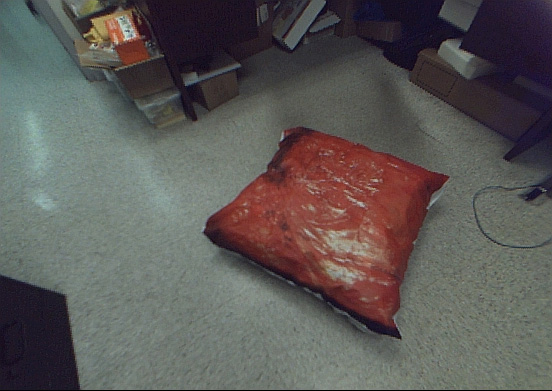

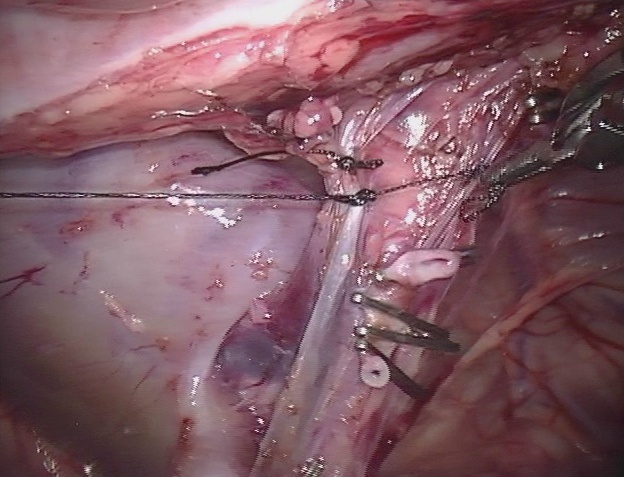

Minimally invasive surgery (MIS) is a technique whereby instruments are inserted into the body via small incisions (or in some cases natural orifices), and surgery is carried out under video guidance. While MIS offers great advantages for the patient, it poses numerous challenges for the surgeon: the restricted field of view of the endoscope, the tool motion constraints imposed by the insertion point, and the loss of haptic feedback. One means of overcoming some of these limitations is to present the surgeon with a registered three-dimensional overlay of information tied to pre-operative or intra-operative volumetric data. This data can provide guidance and feedback on the location of subsurface structures that are not apparent in endoscopic video.

Our objective is to develop algorithms for highly capable context-aware surgical assistant (CASA) robotic systems that maintain a dynamically updated model of the surgical field and the ongoing surgical procedure for the purposes of assistance, evaluation, and mentoring. Information that must be maintained includes a real-time representation of the patient’s anatomy and physiology, the relationship of the surgical instruments to the patient’s anatomy, and the progress of the procedure relative to the surgical plan. In the case of telesurgical systems, one key challenge is the real-time fusion of pre-operative models and images with stereo video and the real-time motion of surgical instruments.

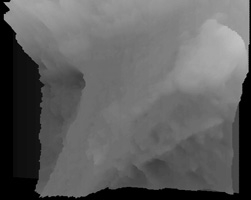

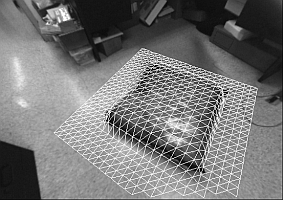

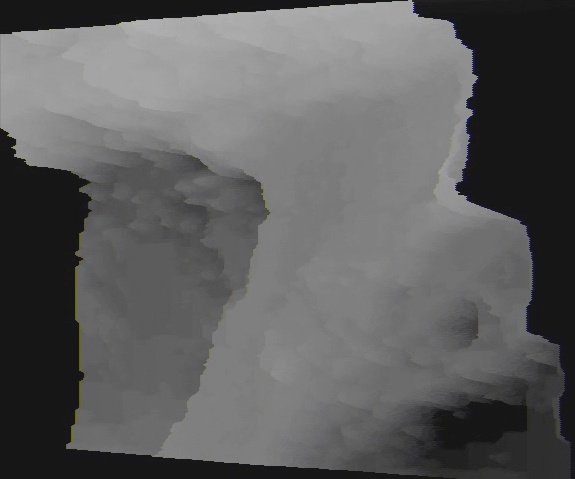

Our approach dynamically registers pre-operative volume data to surfaces extracted from video, which lets the surgeon view high-resolution pre-operative CT or MRI scans, or intra-operative ultrasound scans, directly on tissue surfaces. As a result, operative targets and pre-operative plans become clearly visible.
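To make the overlay idea concrete, the following is a minimal sketch of how a single, already registered 3D point could be drawn into both views of a stereo endoscope. It assumes an idealized rectified pinhole stereo pair; the `StereoRig` class, the `project_stereo` function, and all numeric values are hypothetical illustrations and are not taken from the project's software. The deformable registration itself is not shown.

```python
from dataclasses import dataclass


@dataclass
class StereoRig:
    """Idealized rectified stereo camera (hypothetical, illustrative values only)."""
    focal_px: float     # focal length, in pixels
    cx: float           # principal point x, in pixels
    cy: float           # principal point y, in pixels
    baseline_mm: float  # distance between the left and right optical centers


def project_stereo(rig, point_mm):
    """Project a 3D point given in the left-camera frame (mm) into both images.

    Returns ((u_left, v_left), (u_right, v_right)) in pixels.
    """
    x, y, z = point_mm
    if z <= 0:
        raise ValueError("point must lie in front of the camera (z > 0)")
    u_left = rig.focal_px * x / z + rig.cx
    v = rig.focal_px * y / z + rig.cy
    # In a rectified pair the right camera sits one baseline along +x, so the
    # point appears shifted left by the disparity focal_px * baseline_mm / z.
    u_right = rig.focal_px * (x - rig.baseline_mm) / z + rig.cx
    return (u_left, v), (u_right, v)


if __name__ == "__main__":
    rig = StereoRig(focal_px=1000.0, cx=320.0, cy=240.0, baseline_mm=5.0)
    left, right = project_stereo(rig, (0.0, 0.0, 100.0))
    print("left pixel:", left, "right pixel:", right)
```

Drawing the same registered point at the two returned pixel locations is what makes the overlay appear at the correct depth when viewed stereoscopically; points closer to the camera receive a larger horizontal offset between the two views.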

We are developing an integrated demonstration of the feasibility of performing such a fusion within a CASA robotic system. We will also demonstrate simple capabilities that use the fused information to monitor interactions of the surgical instruments with the target anatomy and to detect changes in the anatomy.

Our project is currently targeting minimally invasive surgery using the da Vinci surgical robot. The da Vinci system provides the surgeon with a stereoscopic view of the surgical field. The surgeon operates by moving two master manipulators which are linked to two (or more) patient-side manipulators. Thus, the surgeon is able to use his or her natural 3D hand-eye coordination skills to perform delicate manipulations in extremely confined areas of the body.

For the CASA project, the robotic surgery system presents several advantages. First, it provides stereo (as opposed to the more common monocular) data from the surgical field. Second, through the da Vinci API, we are able to acquire motion data from the master and slave manipulators, thus providing complete information on the motion of the surgical tools. Finally, we are also provided with motion information on the observing camera itself, making it possible to anticipate and compensate for ego-motion.
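As an illustration of how known camera motion can be used to compensate for ego-motion, the sketch below re-expresses a point observed in an earlier camera frame in the current camera frame. It assumes each camera pose is available as a rotation matrix and translation vector mapping camera coordinates to a fixed world frame; this pose format and the function names are assumptions made for the example, and how poses are actually obtained from the da Vinci API is not shown.

```python
def mat_vec(R, v):
    """Multiply a 3x3 matrix (list of rows) by a 3-vector."""
    return [sum(R[i][k] * v[k] for k in range(3)) for i in range(3)]


def transpose(R):
    """Transpose a 3x3 matrix; for a rotation matrix this is its inverse."""
    return [[R[j][i] for j in range(3)] for i in range(3)]


def compensate_ego_motion(point_cam0, pose0, pose1):
    """Re-express a point seen at time 0 in the camera frame at time 1.

    Each pose is a hypothetical (R, t) pair with p_world = R * p_cam + t.
    The point is assumed to be stationary in the world frame.
    """
    R0, t0 = pose0
    R1, t1 = pose1
    # Camera frame at time 0 -> world frame.
    p_world = [a + b for a, b in zip(mat_vec(R0, point_cam0), t0)]
    # World frame -> camera frame at time 1 (inverse rigid transform).
    diff = [a - b for a, b in zip(p_world, t1)]
    return mat_vec(transpose(R1), diff)


if __name__ == "__main__":
    identity = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
    pose_before = (identity, [0.0, 0.0, 0.0])
    pose_after = (identity, [10.0, 0.0, 0.0])  # camera moved 10 mm along +x
    print(compensate_ego_motion([0.0, 0.0, 100.0], pose_before, pose_after))
```

With such a prediction, a change in where a surface point appears can be separated into the part explained by camera motion and the remainder, which may reflect tissue motion or deformation.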

Algorithms are developed and evaluated on phantom data and data acquired using the Intuitive Surgical da Vinci system.

PUBLICATIONS

- Gregory Hager, Balazs Vagvolgyi and David Yuh. Stereoscopic Video Overlay with Deformable Registration. Medicine Meets Virtual Reality, 2007.

VIDEOS

QuickTime or another MP4-compatible player is required.

Pig Source.mp4

Pig Depth Map View.mp4

Pig deformable registration shading.mp4

YouTube video on our stereo reconstruction
